In today's tech landscape, one term continues to steal the limelight: Generative AI. Its profound impact on various industries and its ability to create content that can be hard to distinguish from human work have sparked widespread interest.

But what exactly is Generative AI?

What is Generative AI?

At its core, Generative AI is a subset of artificial intelligence that uses neural networks to create new, realistic data resembling the data it was trained on.

Machine Learning: The foundation of Generative AI lies in machine learning, where algorithms learn patterns and make predictions or decisions based on data.

Neural Networks: Inspired by the human brain's neural structure, neural networks are the backbone of deep learning, allowing machines to learn and make sense of complex data.

Deep Learning: A subset of machine learning, deep learning trains neural networks with many layers on vast amounts of data to perform tasks like image and speech recognition.

How does Generative AI work?

Generative AI models use neural networks to identify intricate patterns and structures within existing datasets, and then draw on those patterns to create new outputs that resemble the original data.

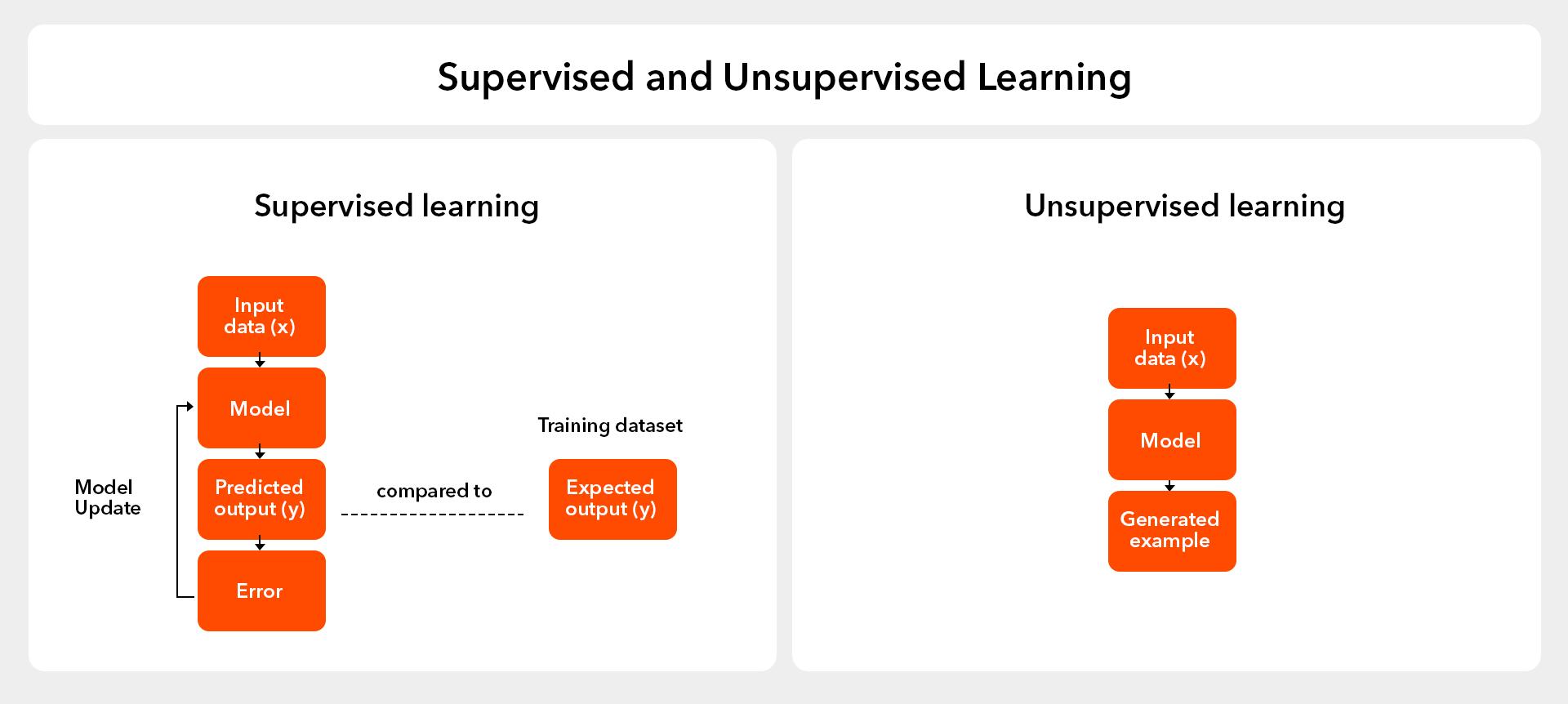

A significant advancement in Generative AI is its ability to make use of different learning approaches. In particular, unsupervised or semi-supervised learning plays a prominent role during training, enabling models to learn from existing content such as text, audio, images, video files, and even code, and then use it to create new content.

These approaches have enabled organizations to make efficient use of large amounts of unlabeled data, which in turn facilitates the development of foundation models: large models pretrained on broad data that can be adapted to many tasks.

Generative AI models

While bigger datasets are one catalyst that led to the generative AI boom, a variety of major research advances led to the most widely used generative AI models: Diffusion models, Generative Adversarial Networks (GANs), and Transformer-based models.

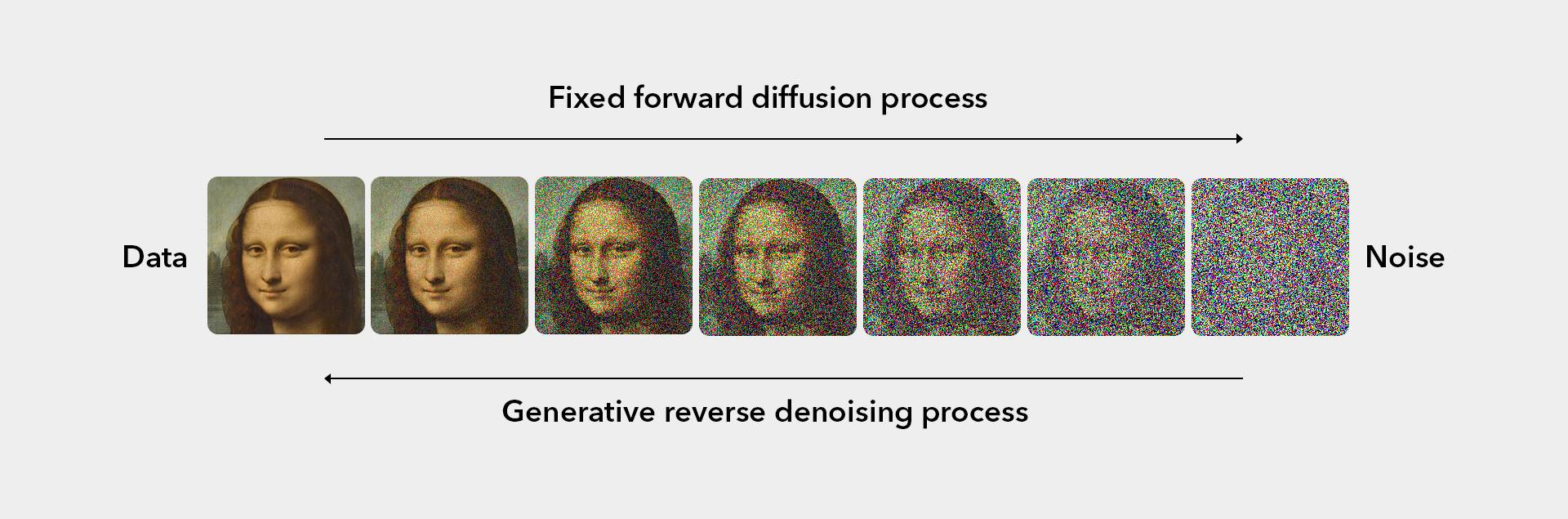

Diffusion model

Diffusion models, such as denoising diffusion probabilistic models (DDPMs), are a type of generative AI model. During training, noise is gradually added to real data over many small steps until only random noise remains, and a neural network learns to reverse each step by predicting the noise that was added. To generate new and unique data, the model starts from pure random noise and applies this learned denoising process step by step.

To make this more tangible, let's use a puzzle analogy:

- Initial Scattering of Puzzle Pieces:

Imagine you have a puzzle box filled with pieces scattered randomly on a table. Each piece represents a pixel or element of data.

- Piece Shuffling (Noise Diffusion):

During training, the model watches finished puzzles being shuffled apart a little at a time until no picture is left, simulating the gradual diffusion of noise. This step represents the iterative forward process in diffusion models.

- Gradual Assembly (Noise Reduction):

To generate something new, the model reverses the shuffling. Starting from the scattered pieces, each step moves pieces that belong together a little closer, and the puzzle begins to take shape.

- Completion of the Puzzle (Data Synthesis):

After many rounds of gradual assembly, the puzzle is complete. In the context of diffusion models, the final assembled puzzle represents the synthetic data generated by the model.
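The forward (noising) and reverse (denoising) processes can be sketched in a few lines of dependency-free Python. This is a minimal sketch under stated assumptions: a linear noise schedule with β rising from 1e-4 to 0.02 (the schedule used in the original DDPM paper), a toy four-value "image", and the closed-form forward step x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, where ᾱ_t is the running product of (1 − β). The `predict_noise` argument is a hypothetical placeholder for the trained network, so the sampler shows the structure of generation rather than producing meaningful data.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, rising linearly (assumed schedule, num_steps >= 2)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Forward process in one shot:
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, eps ~ N(0, 1).
    Returns x_t and eps (eps is what the denoising network is trained to predict)."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def sample(predict_noise, size, betas, rng):
    """Reverse process: start from pure noise and remove predicted noise step by step.
    predict_noise(x_t, t) is a hypothetical stand-in for a trained network."""
    alpha_bars = cumulative_alphas(betas)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(len(betas))):
        alpha_t = 1.0 - betas[t]
        eps_hat = predict_noise(x, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alpha_t) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # fresh noise on every step except the last
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_beta_schedule(1000)
    alpha_bars = cumulative_alphas(betas)
    x0 = [1.0, -0.5, 0.25, 0.0]  # toy "image" of four pixel values
    for t in (0, 250, 999):
        xt, _ = add_noise(x0, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 5), [round(v, 2) for v in xt])
    # Placeholder predictor that always guesses "no noise"; a real model is trained
    # so that predict_noise(x_t, t) is close to the eps returned by add_noise.
    print(sample(lambda x, t: [0.0] * len(x), len(x0), betas, rng))
```

Printing the three timesteps shows the signal weight √ᾱ_t shrinking toward zero, which is the "shuffling apart" of the puzzle; the sampler loop is the "gradual assembly" run backwards from noise.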

Generative Adversarial Networks

A generative adversarial network (GAN) is a machine learning framework that pits two neural networks, a generator and a discriminator, against each other. The goal of this contest is to produce synthetic data that is indistinguishable from real data. To grasp this, let's consider an analogy: a forger (generator) trying to create counterfeit art, and an art detective (discriminator) trying to spot the fakes.

The Generator (Forger):

- The generator's job is to create synthetic data that is so realistic that it can pass as genuine.

- It takes random noise as input and transforms it into data (e.g., images, text) that ideally mirrors the training data it has been exposed to.

- In our analogy, the forger aims to create artwork that is so authentic that it fools the art detective.

The Discriminator (Art Detective):

- The discriminator's role is to differentiate between real and synthetic data.

- It is trained on real data and the generated data from the generator.

- The discriminator assigns a probability to the input data, indicating how likely it is to be real.

- In our analogy, the art detective examines a painting and determines the likelihood of it being genuine.

Now, let's walk through the GAN training process:

Step 1: Initialization

- The generator starts with random noise as input and generates synthetic data.

- The discriminator is initialized and trained on both real and synthetic data.

Step 2: Initial Feedback Loop

- The discriminator evaluates the synthetic data from the generator and provides feedback on its authenticity.

- The generator uses this feedback to improve its ability to create more realistic data.

Step 3: Iterations

- The generator and discriminator go through a series of iterations, refining their abilities.

- The generator adapts to the discriminator's feedback, learning to create data that is increasingly difficult to distinguish from real data.

- Simultaneously, the discriminator refines its ability to differentiate between real and synthetic data.

Step 4: Convergence

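- Ideally, the generator becomes so adept at creating realistic data that the discriminator can no longer tell real from generated inputs; at the theoretical optimum, it assigns a probability of 0.5 to everything.

- This equilibrium is the point of convergence, where the generator produces high-quality synthetic data. In practice, GAN training can be unstable and may oscillate rather than settle.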
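The four steps above can be made concrete with a deliberately tiny GAN in pure Python. This is an illustrative sketch, not how production GANs are built: it assumes one-dimensional "real" data drawn from a normal distribution with mean 4, a linear generator G(z) = a·z + b, and a logistic discriminator D(x) = sigmoid(w·x + c), with gradients derived by hand. Real GANs use deep networks and a framework such as PyTorch. Toy setups like this one often circle around the target instead of settling, which mirrors the instability mentioned above.

```python
import math
import random


def sigmoid(u):
    # Numerically stable logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


class ToyGAN:
    """1-D GAN sketch: generator G(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0  # generator ("forger") parameters
        self.w, self.c = 0.1, 0.0  # discriminator ("detective") parameters

    def generate(self, zs):
        # Step 1: the generator maps random noise z to synthetic samples.
        return [self.a * z + self.b for z in zs]

    def discriminate(self, x):
        # Probability the discriminator assigns to x being real.
        return sigmoid(self.w * x + self.c)

    def discriminator_loss(self, real, fake):
        # Binary cross-entropy with real samples labelled 1 and fake samples 0.
        terms = [-math.log(self.discriminate(x)) for x in real]
        terms += [-math.log(1.0 - self.discriminate(x)) for x in fake]
        return sum(terms) / len(terms)

    def discriminator_step(self, real, fake, lr):
        # One gradient-descent step on discriminator_loss with respect to (w, c).
        gw = gc = 0.0
        for x in real:  # derivative of -log(sigmoid(u)) with respect to u is -(1 - D(x))
            g = -(1.0 - self.discriminate(x))
            gw += g * x
            gc += g
        for x in fake:  # derivative of -log(1 - sigmoid(u)) with respect to u is D(x)
            g = self.discriminate(x)
            gw += g * x
            gc += g
        n = len(real) + len(fake)
        self.w -= lr * gw / n
        self.c -= lr * gc / n

    def generator_step(self, zs, lr):
        # One step on the non-saturating generator loss -log D(G(z)):
        # the forger is rewarded when the detective calls its samples real.
        ga = gb = 0.0
        for z in zs:
            x = self.a * z + self.b
            g = -(1.0 - self.discriminate(x)) * self.w  # dLoss/dx via the chain rule
            ga += g * z
            gb += g
        self.a -= lr * ga / len(zs)
        self.b -= lr * gb / len(zs)


def train(steps=2000, batch=64, lr=0.05, real_mean=4.0, seed=0):
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):  # Step 3: repeated iterations
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        gan.discriminator_step(real, gan.generate(zs), lr)  # detective studies real and fake
        gan.generator_step(zs, lr)  # Step 2: forger adapts to the detective's feedback
    return gan


if __name__ == "__main__":
    gan = train()
    print(f"generator: G(z) = {gan.a:.2f} * z + {gan.b:.2f}")
```

Alternating one discriminator update with one generator update is the simplest schedule; many practical setups tune this ratio and the learning rates to keep the two players balanced.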

Transformer architecture

In 2017, Google researchers introduced the transformer architecture, which has since reshaped natural language processing and many other applications. Transformers underpin large language models such as those behind ChatGPT. Unlike recurrent networks, which read a sequence one token at a time, a transformer processes all positions of the input in parallel and uses attention to relate them to one another. Let's use an analogy to better understand how transformer-based models work:

Imagine an office where information flows through specialized employees called "attention heads." They work within a layered structure of departments, and within a department anyone can consult anyone else, much like in an open-plan office. This workplace operates like this:

The Office Layout (Architecture):

- The office is divided into departments, each managed by a supervisor (Encoder Layer).

- Each supervisor passes the department's results on to the next department, so information is refined step by step across the whole office (stacked Encoder Layers).

Communication within Departments (Self-Attention Mechanism):

- Inside each department, several employees (attention heads) work in parallel, each focusing on a different aspect of the information, akin to attending to different relationships between words in a sentence (Multi-Head Attention).

- The self-attention mechanism lets each employee weigh how relevant every other word in the sentence is to the word they are currently working on.
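What a single "employee" computes can be sketched directly. The snippet below implements single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)·V, as used in the original transformer, in plain Python. The projection matrices in the usage example are illustrative assumptions (identity matrices); in a real model they are learned, and multi-head attention runs several such heads in parallel with separate projections and concatenates their outputs.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(x, W):
    """Row vector x (length d_in) times matrix W (d_in rows, d_out columns)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.
    X is a list of token vectors; returns one output vector per token plus the weights."""
    Q = [matvec(x, Wq) for x in X]  # what each token is looking for
    K = [matvec(x, Wk) for x in X]  # what each token offers
    V = [matvec(x, Wv) for x in X]  # the content each token passes along
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Relevance of every token (key) to this token (query), scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings
    identity = [[1.0, 0.0], [0.0, 1.0]]
    _, weights = self_attention(X, identity, identity, identity)
    for row in weights:
        print([round(p, 3) for p in row])
```

Each row of weights sums to 1 and says how much one token "listens" to every other token; here the third token, whose vector overlaps with both others, attends most strongly to itself.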

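Refining Notes (Feedforward Networks):

- After gathering relevant information through attention, each employee refines their own notes privately: every word's representation passes through the same small feedforward network, independently of the other words (Feedforward Networks).

- This step transforms what attention gathered into a richer representation of each word, which the next department then builds on.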
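In the original transformer, this per-word step is a position-wise feedforward network, FFN(x) = ReLU(x·W1 + b1)·W2 + b2, applied with the same weights to every token separately. The sketch below assumes small hand-picked weights purely for illustration (real models learn them and use much wider hidden layers) and shows that each token's output depends only on that token.

```python
def matvec(x, W):
    """Row vector x (length d_in) times matrix W (d_in rows, d_out columns)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


def relu(v):
    return [max(0.0, value) for value in v]


def feed_forward(x, W1, b1, W2, b2):
    """FFN(x) = ReLU(x W1 + b1) W2 + b2 for a single token vector x."""
    hidden = relu([h + b for h, b in zip(matvec(x, W1), b1)])
    return [o + b for o, b in zip(matvec(hidden, W2), b2)]


def position_wise(X, W1, b1, W2, b2):
    # The same network is applied to every token on its own; information moves
    # between tokens only in the attention step, never here.
    return [feed_forward(x, W1, b1, W2, b2) for x in X]


if __name__ == "__main__":
    W1 = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical weights: 2 inputs -> 2 hidden units
    b1 = [0.0, 0.0]
    W2 = [[2.0], [3.0]]  # 2 hidden units -> 1 output
    b2 = [0.5]
    print(position_wise([[1.0, -1.0], [0.0, 2.0]], W1, b1, W2, b2))
```

For the first token, ReLU zeroes the negative hidden value, leaving 2·1 + 0.5 = 2.5; the second token gives 3·2 + 0.5 = 6.5, regardless of what the first token contains.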

Bringing in Experts (Encoder-Decoder Architecture):

- For sequence-to-sequence tasks such as translation, the office is split into two specialized teams (Encoder and Decoder).

- The Encoder focuses on understanding the input information, while the Decoder generates the output; the Decoder's attention heads also consult the Encoder's results, so the two teams collaborate effectively.

How a transformer processes a sentence:

Step 1: Input Processing

- Imagine a sentence entering the office. The employees in each department (attention heads) pay attention to specific words, understanding their roles in the overall context.

- Supervisors (Encoder) ensure that each department processes the information thoroughly.

Step 2: Hierarchical Collaboration

- The supervisors communicate with each other, sharing insights about the sentence.

- This hierarchical collaboration allows for a holistic understanding of the input.

Step 3: Task Delegation (Encoder-Decoder Interaction)

- For translation tasks, the Encoder processes the input language, and the Decoder generates the corresponding output language.

- Attention heads facilitate communication between these two departments, ensuring a smooth delegation of tasks.

Step 4: Output Generation

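- The Decoder produces the translated sentence one word at a time, each new word depending on the encoded input and the words it has already generated, so the output draws on the information processed by the entire office.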
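Step 4 can be sketched as a greedy decoding loop: at each step, pick the highest-scoring next token given the source and the output so far, and stop at an end-of-sentence token. The `next_token_scores` function and the word-for-word `LEXICON` below are hypothetical stand-ins for a trained decoder; a real model scores its whole vocabulary using attention over the encoder output, and many systems use beam search or sampling instead of pure greedy selection.

```python
LEXICON = {"le": "the", "chat": "cat", "noir": "black"}  # hypothetical toy vocabulary
EOS = "</s>"  # end-of-sentence marker
BOS = "<s>"  # start-of-sentence marker


def toy_next_token_scores(source, prefix):
    """Hypothetical stand-in for a trained decoder: score every candidate next token."""
    position = len(prefix) - 1  # number of real tokens generated so far
    scores = {token: 0.0 for token in list(LEXICON.values()) + [EOS]}
    if position < len(source):
        scores[LEXICON[source[position]]] = 1.0
    else:
        scores[EOS] = 1.0
    return scores


def greedy_decode(next_token_scores, source, max_len=20):
    """Generate output autoregressively: each choice conditions on all previous choices."""
    output = [BOS]
    for _ in range(max_len):
        scores = next_token_scores(source, output)
        token = max(scores, key=scores.get)  # greedy: take the single best token
        output.append(token)
        if token == EOS:
            break
    return output[1:]  # drop the start marker


if __name__ == "__main__":
    print(greedy_decode(toy_next_token_scores, ["le", "chat"]))
```

The loop structure, not the toy scorer, is the point: the decoder is called once per output token, and each call sees everything generated so far.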

Applications of Generative AI

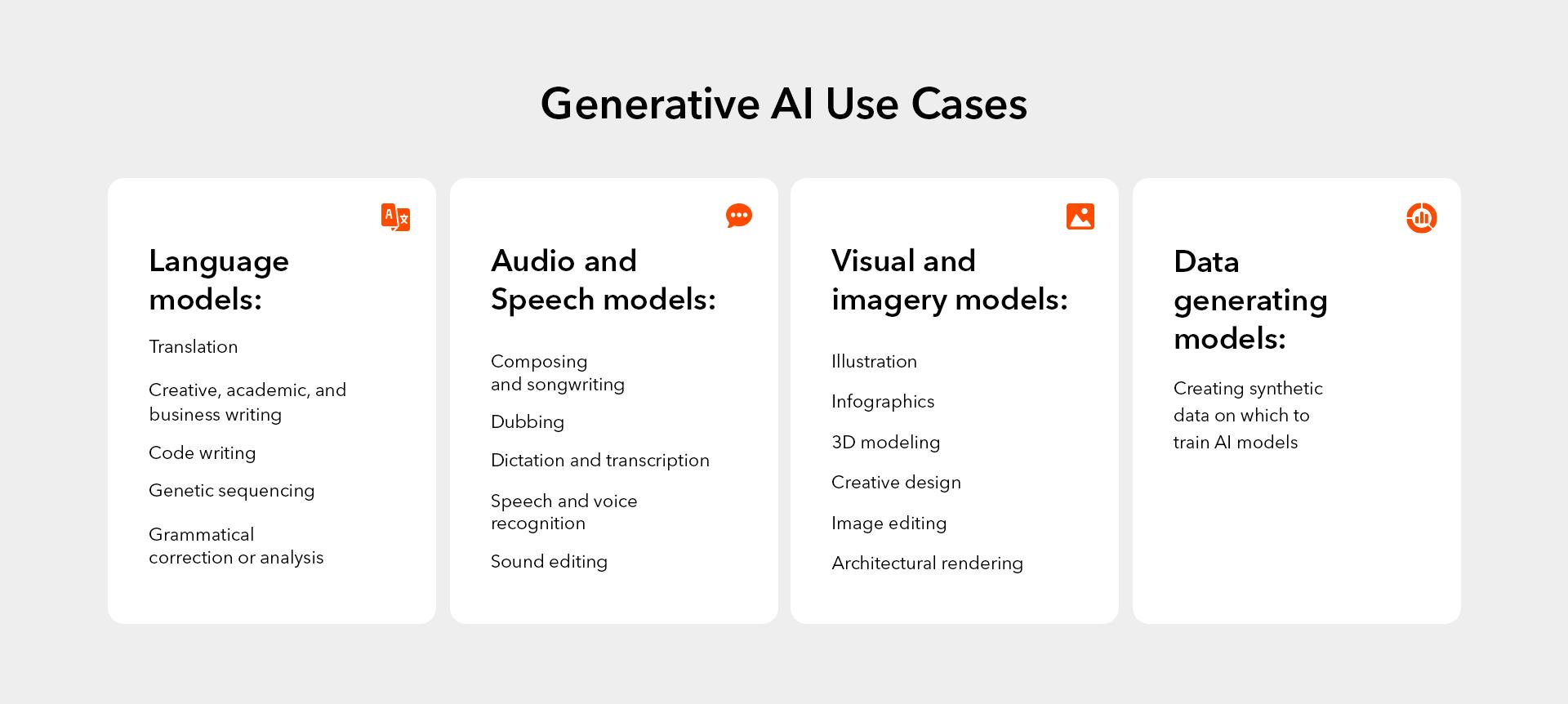

Generative AI can be applied wherever text, images, audio, video, or code are produced, which makes it a powerful tool for streamlining the workflows of creatives, engineers, researchers, scientists, and more.

Generative AI concerns

While Generative AI holds immense promise, its burgeoning capabilities come hand-in-hand with ethical considerations that demand our attention. As we usher in a new era of creativity and innovation, we must be vigilant about the potential pitfalls that could undermine the positive impact of Generative AI.

- Deepfake dilemma: At the forefront of concerns is the specter of deepfake technology. Generative AI's ability to craft hyper-realistic content raises the alarm for potential misuse. Deepfakes, fueled by sophisticated Generative AI models, can convincingly swap faces, voices, or even entire scenarios in videos, leading to a potential epidemic of misinformation, identity theft, and reputational damage.

- Bias in Generated Content: Another critical concern centers around perpetuating biases in generated content. If the training data used by Generative AI models carries inherent biases, the generated outputs may inadvertently reflect and even amplify those biases. This raises questions about fairness, inclusivity, and the potential reinforcement of societal stereotypes.

- Security Risks: The very nature of Generative AI, which empowers machines to create authentic-seeming content, poses potential security risks. From realistic phishing emails to convincingly forged documents, the technology opens avenues for malicious actors to exploit its creative potential.

- Concerns for businesses: Businesses should also exercise caution when integrating AI into their processes, since each AI component can introduce new points of failure in their security. This is especially true when AI handles user data or important business transactions. Developers must build these systems with care, supervise them continuously, and keep improving them.

What is the future of Generative AI?

As we peer into the future of Generative AI, we can envision several exciting possibilities:

- Creative Augmentation: Expect a renaissance in creativity as Generative AI becomes a key collaborator, generating art, music, and ideas that augment human ingenuity.

- Personalization Revolution: Generative AI will usher in a new era of personalized experiences, tailoring content and interfaces to individual preferences.

- Leveraging AI for businesses: Integrating AI technologies into business processes can streamline workflows. Businesses can benefit from automated tasks, data analysis, and decision-making support that optimize performance and deliver valuable insights.

- Harmony Between Humans and Machines: Collaboration between humans and Generative AI will intensify, driving co-creation in problem-solving, design, and innovation.

- Ethical Advancements: As Generative AI evolves, we'll see a strong focus on ethical issues, ensuring transparency, responsible development, and bias mitigation.

- Transformative Impact Across Sectors: Generative AI's influence will spread far and wide, transforming sectors like healthcare and finance, and offering innovative solutions to global challenges.

In our exploration into the intricate world of Generative AI, we stand at the crossroads of innovation and accountability. This ever-evolving field offers incredible opportunities, yet it also brings ethical challenges to the forefront.

The future of Generative AI is not just about marveling at its capabilities; it calls for us to advocate for conscientious and responsible development. By promoting collaboration, prioritizing ethical guidelines, and staying vigilant about potential risks, we can collectively shape a future where Generative AI becomes a force for positive change. As we move into a future shaped by AI, it is crucial that we harness the potential of Generative AI responsibly for the greater good of society.